Online identity is rapidly becoming the foundation of how organisations verify customers, approve transactions, and manage risk. A name, a face, and an identification document used to be enough to build confidence in a digital interaction. That assumption no longer holds. Generative AI has introduced a new threat vector: synthetic identities that combine fabricated personal details with convincing AI-generated photos. These images are no longer limited to manipulated social media avatars. They now appear in onboarding workflows, remote identity checks, customer support interactions, and even high-stakes verification processes involving payments, loans, or access to sensitive accounts.

The FBI’s Internet Crime Complaint Center has already warned that criminals are using generative AI to create convincing profile photos and falsified identification documents. This trend signals a shift from isolated misuse to a broader, more systemic form of fraud, where synthetic images are designed specifically to bypass the safeguards businesses rely on. As synthetic media continues to advance in realism, organisations must rethink how they authenticate visual evidence and how they determine whether a face or document presented during verification can be trusted.

Deepfake Scams and Synthetic Photos as Infrastructure for Fraud

Generative AI has moved beyond niche experimentation. Fraud networks now use it as infrastructure. Deepfake scams originally focused on manipulating videos, but images have become equally dangerous. AI-generated portraits display realistic lighting, skin texture, and depth. Fabricated IDs imitate the visual structure of government-issued documents closely enough to fool both human reviewers and automated tools built for pre-AI threats.

Synthetic identities are typically assembled in a structured manner. Fraudsters generate a face, create matching documents, design a consistent narrative, and introduce this persona into verification processes. Once accepted, the synthetic identity functions like any other customer account. It can access financial services, conduct transactions, exploit promotions, approach other users on social platforms, or participate in internal corporate interactions.

Scalability is what makes AI identity fraud particularly damaging. Instead of sourcing stolen photos or manually editing existing images, attackers can produce thousands of synthetic identities rapidly. Each one is unique, so it matches nothing in shared fraud databases, which are built from previously seen images and documents. As a result, the barrier to launching large-scale fraud operations is significantly lower than before, and the traditional signals businesses look for are becoming less reliable.
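To illustrate why uniqueness defeats lookup-based defences, here is a minimal Python sketch of a shared blocklist keyed on image fingerprints. The byte strings, the `known_fraud` set, and the use of SHA-256 are assumptions made for illustration; production systems typically use perceptual hashes or embeddings, but the limitation is the same: an image that has never been seen has nothing to match.

```python
import hashlib


def fingerprint(image_bytes: bytes) -> str:
    """Return an exact-match fingerprint (SHA-256 hex digest) of the image bytes."""
    return hashlib.sha256(image_bytes).hexdigest()


def seen_before(image_bytes: bytes, blocklist: set) -> bool:
    """True if this exact image has already been reported to the blocklist."""
    return fingerprint(image_bytes) in blocklist


# Hypothetical shared database built from images reported in earlier fraud cases.
known_fraud = {fingerprint(b"stolen-photo-bytes")}

# A reused stolen photo is caught; a freshly generated face is not.
reused_photo = b"stolen-photo-bytes"
fresh_synthetic_photo = b"newly-generated-photo-bytes"

reused_hit = seen_before(reused_photo, known_fraud)
synthetic_hit = seen_before(fresh_synthetic_photo, known_fraud)
```

The lookup only helps against reuse. A generated face passes it every time, which is why checks on how the image was produced are needed alongside it.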

Image Verification Is Now Central to Fraud Risk Management

Identity verification systems were built on the assumption that submitted images originated from a camera and represented a real person. Generative AI erases that assumption. A photo may appear natural, but without mechanisms to analyse its authenticity at the source level, organisations are left with a significant blind spot.

Image verification has therefore become a crucial component of fraud risk management. Banks, fintech companies, marketplaces, SaaS platforms, mobility services, dating apps, and gig-economy platforms all rely on remote interactions that depend on trust in visual evidence. Each of these sectors faces the same dilemma: they can no longer evaluate only what the image depicts. They must determine how the image was produced.

This distinction is not abstract. If a selfie or ID photo is synthetic, no underlying identity record exists. The person cannot be matched to government databases, financial histories, or behavioural patterns, so the verification process is structurally compromised. Synthetic images are designed to bypass systems that rely on face matching, document checking, or manual review. Without authenticity analysis, businesses unknowingly approve customers who do not exist.

The Enterprise Consequences of Synthetic Media in Verification Pipelines

For enterprise environments, synthetic identities create compound risks. Fraudulent accounts established using AI photos can operate for extended periods and interact with legitimate users. They may participate in financial activities, be onboarded as team members, or run internal influence campaigns. Because synthetic faces are unique and have never been used elsewhere, standard fraud signals often fail to identify them.

Regulated industries face additional exposure. KYC and AML obligations require organisations to demonstrate effective mitigation controls. Accepting synthetic images during onboarding introduces compliance failures and can lead to regulatory scrutiny. Financial institutions risk becoming vehicles for criminal activity if they cannot distinguish real documentation from synthetic reproductions.

Operationally, manual review teams struggle with these new fraud patterns. Synthetic images often contain no obvious editing artifacts, and their overall realism makes them difficult to evaluate without specialised tools. Reviewers spend more time analysing submissions, which slows onboarding, increases cost, and reduces the accuracy of human judgment. Automated authenticity checks are essential to keep verification processes consistent and scalable.

Synthetic Document Fraud: The Next Stage of AI Identity Abuse

Generative AI also affects document verification workflows. Fraudsters no longer require high-quality printers or physical forgery equipment. They can now generate convincing replicas of ID documents digitally, incorporating micro-details that earlier fraud methods could not replicate. These artificial documents align with synthetic selfies, creating a cohesive identity that appears legitimate across multiple verification steps.

Not all attacks involve fully synthetic material. Some fraud rings combine authentic and artificial elements, modifying IDs or blending real components with synthetic faces. This hybrid approach is particularly difficult to detect, because it borrows from legitimate sources. A document may contain real markers but still present an identity that never existed.

Businesses cannot depend on template matching or pixel-based comparison alone. They need systems that identify whether an image bears the signature indicators of generative AI. The goal is no longer just to spot tampering but to understand whether the original asset was captured by a camera or generated by a model.

How Image Authenticity Detection Creates Operational and Compliance Integrity

To address these challenges, identity verification workflows increasingly incorporate image authenticity detection. Instead of analysing only the visible contents of an image, the technology evaluates deeper statistical and structural properties that reveal clues about its origin. These include texture distributions, generative model traces, lighting uniformity patterns, and the presence or absence of camera-sensor characteristics.

Authenticity detection becomes an upstream safeguard. When a user submits a selfie or ID, the system first evaluates whether the image is AI-generated or altered. Only after passing this step does the photo proceed to face matching, document reading, or human review. This protects the business by isolating synthetic attempts before they enter downstream systems.
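The ordering described above can be sketched in a few lines of Python. This is a minimal illustration, not any vendor's implementation: `Submission`, `Result`, `verify`, and the stand-in checks are hypothetical names, and the authenticity check is passed in as a plain function so that any detector could be substituted.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class Submission:
    user_id: str
    image: bytes


@dataclass
class Result:
    accepted: bool
    stages_run: List[str]
    reason: str


Check = Callable[[Submission], bool]


def verify(submission: Submission,
           is_authentic: Callable[[bytes], bool],
           downstream: List[Tuple[str, Check]]) -> Result:
    """Run the authenticity gate first; run later stages only if it passes."""
    stages = ["authenticity"]
    if not is_authentic(submission.image):
        # Stop here: the image never reaches face matching or document reading.
        return Result(False, stages, "image flagged as AI-generated or altered")
    for name, check in downstream:
        stages.append(name)
        if not check(submission):
            return Result(False, stages, name + " failed")
    return Result(True, stages, "all checks passed")


# Stand-in checks for illustration only (assumed, not real detectors).
downstream_checks = [
    ("face_match", lambda s: True),
    ("document_read", lambda s: True),
]

blocked = verify(Submission("u1", b"fake"), lambda img: False, downstream_checks)
passed = verify(Submission("u2", b"real"), lambda img: True, downstream_checks)
```

In this sketch `blocked.stages_run` contains only the authenticity stage, which is the point of placing the gate upstream: a rejected image consumes no downstream processing or reviewer time.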

From a compliance standpoint, this approach strengthens KYC and AML controls. Regulators expect that verification systems reflect modern risk realities. Deploying authenticity detection demonstrates that a business is proactively addressing emerging fraud techniques rather than relying on outdated assumptions. It reduces regulatory exposure by offering a measurable and auditable layer of security.

Operationally, authenticity analysis improves throughput. Review teams receive fewer questionable submissions, automated tools make clearer decisions, and onboarding remains efficient even as fraud attempts increase. This leads to lower fraud losses, reduced manual overhead, and more predictable verification outcomes.

Where WasItAI Fits Within Enterprise Fraud Prevention Strategies

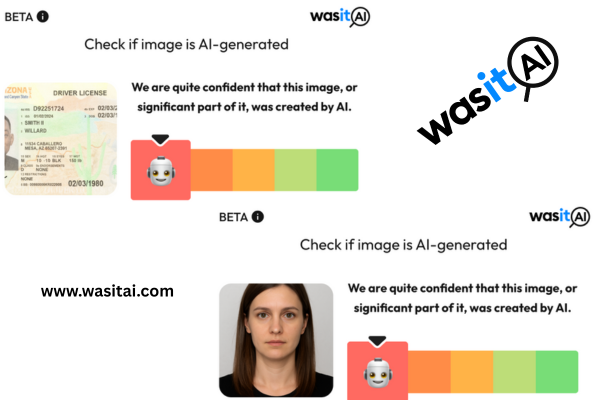

Businesses confronting AI identity fraud increasingly turn to specialised tools built specifically for detecting synthetic images. WasItAI supports this need by analysing uploaded photos, including selfies, document images, and profile photos, to determine whether they originate from generative AI.

For organisations, this provides a decisive first line of defence. Instead of evaluating whether an image looks realistic, WasItAI determines whether it is authentic. If a photo contains synthetic elements or indicators of AI manipulation, it can be flagged or rejected before it reaches verification systems. This protects onboarding pipelines, marketplace listings, account creation processes, and any workflow that depends on visual trust.
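As a rough sketch of the flag-or-reject decision, the Python below maps a detector's output to an action. It assumes the detector returns a score between 0 and 1, where higher means more likely AI-generated. The score scale, the `triage` function, and both thresholds are hypothetical and do not describe WasItAI's actual interface or defaults.

```python
def triage(ai_score: float, flag_at: float = 0.5, reject_at: float = 0.9) -> str:
    """Map a hypothetical AI-likelihood score in [0, 1] to an action.

    "reject": stop the submission before it reaches verification systems
    "flag":   route the submission to manual review
    "pass":   continue to face matching and document checks
    """
    if not 0.0 <= ai_score <= 1.0:
        raise ValueError("ai_score must be between 0 and 1")
    if not flag_at <= reject_at:
        raise ValueError("flag_at must not exceed reject_at")
    if ai_score >= reject_at:
        return "reject"
    if ai_score >= flag_at:
        return "flag"
    return "pass"


# Example decisions for three illustrative scores.
decisions = [triage(score) for score in (0.1, 0.6, 0.95)]
```

Where the thresholds sit is a business decision. A lower `flag_at` sends more borderline images to reviewers, while a higher `reject_at` reduces the chance of turning away genuine users.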

WasItAI’s technology also assists in environments where fraudulent participants attempt to scale operations. Because synthetic identities can be generated in large volumes, companies need automated solutions capable of screening images quickly and consistently. WasItAI helps maintain the credibility of verification pipelines, enabling platforms to grow without increasing fraud exposure.

For regulated businesses, deploying authenticity detection enhances compliance credibility. It demonstrates that the organisation is responding to the rise of synthetic media with appropriate controls. This is increasingly important as regulators monitor how companies adapt to AI threats affecting customer identification procedures.

Strengthening Digital Identity Infrastructure for an AI-Driven Fraud Landscape

Synthetic identities created through deepfakes and AI photos represent a defining challenge for digital verification systems. Fraudsters now operate at a scale and precision that traditional methods cannot address without additional layers of defence. Businesses that depend on remote identity confirmation must recognise that image-based trust is no longer guaranteed.

By adopting image authenticity detection, organisations reinforce the integrity of their verification frameworks. They prevent synthetic media from entering their systems, reduce fraud losses, and protect their users from engagement with fabricated identities. Most importantly, they preserve the reliability of digital identity in an environment where generative AI is reshaping the threat landscape.

For companies that rely on identity trust, whether in finance, marketplaces, mobility, or community-driven platforms, strengthening image verification is no longer optional. It is a strategic requirement. Solutions such as WasItAI equip organisations to operate confidently despite the rapid evolution of synthetic media, ensuring that genuine users receive secure and seamless access while fraudulent identities are intercepted before they cause harm.