Artificial intelligence has forced many industries to rethink authenticity, and journalism is among the most exposed. What once served as the foundation of factual reporting – the photograph – is now being destabilized by synthetic visuals capable of mimicking reality with unnerving precision. The infiltration of AI-generated images into real news coverage is no longer an emerging threat; it is already reshaping editorial workflows, public trust, and the credibility of entire media institutions.

In the past year, newsrooms across continents have found themselves unwitting participants in a credibility crisis. Visuals circulating on social platforms during moments of global tension have slipped into professional reporting, sometimes through user submissions, sometimes through wire agencies, and sometimes through the pressure of publishing rapidly in breaking-news cycles. When reputable outlets retract images later revealed as AI-generated, the consequences extend far beyond embarrassment. Each instance chips away at the fragile bond between media and the public, reinforcing a disquieting question: if even journalists cannot tell what is real, how can audiences?

How AI-Generated News Photos Enter the Media Ecosystem

The creation and spread of AI imagery have accelerated dramatically with the rise of diffusion models, placing newsrooms in a precarious position. A recent academic study on AI-generated disinformation highlighted a growing “authenticity gap” where technological advancements in image generation have begun to outpace detection capabilities. The study noted that these images are increasingly indistinguishable from real photography, especially when depicting conflict zones, humanitarian crises, or explosive political moments.

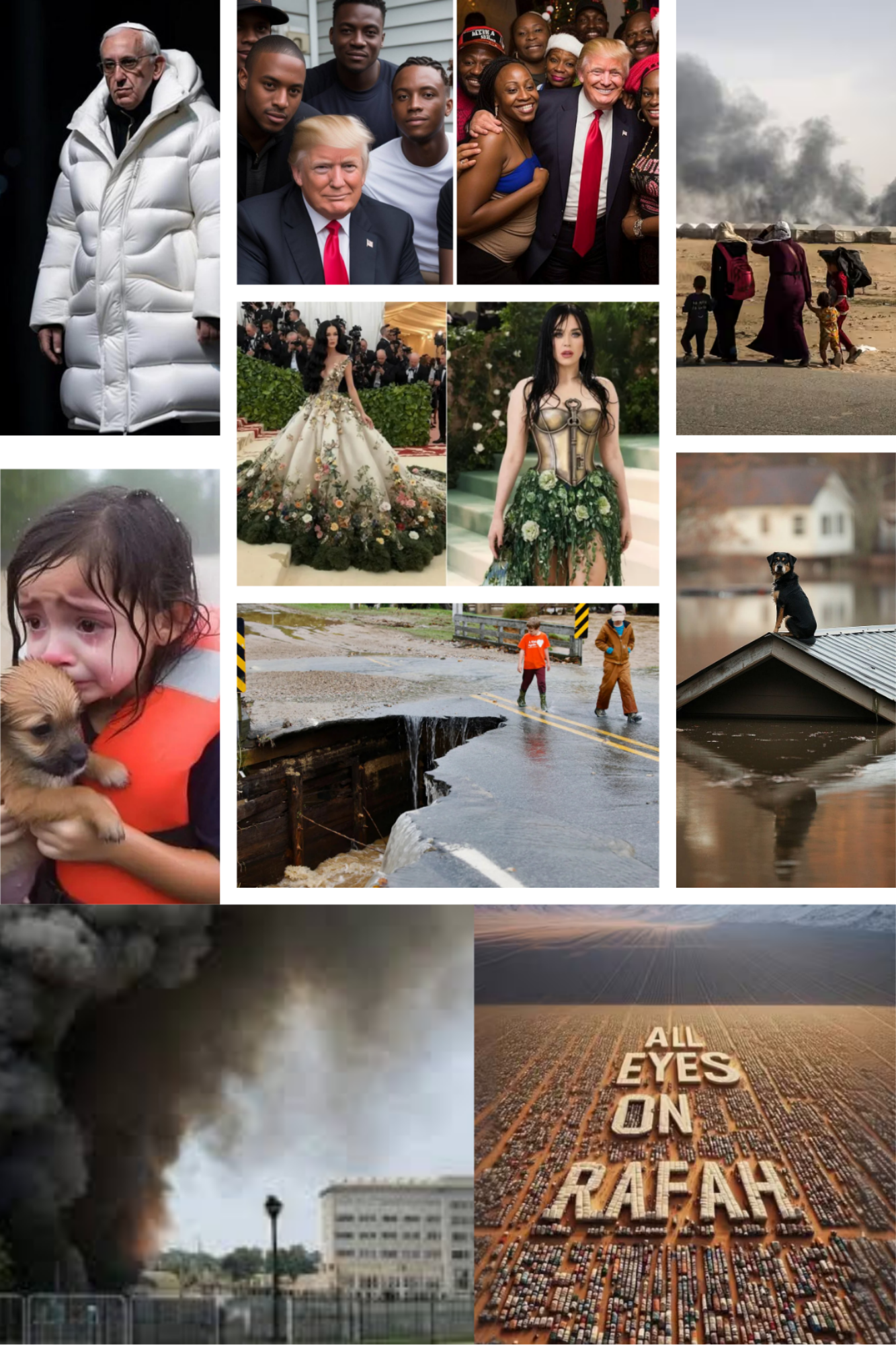

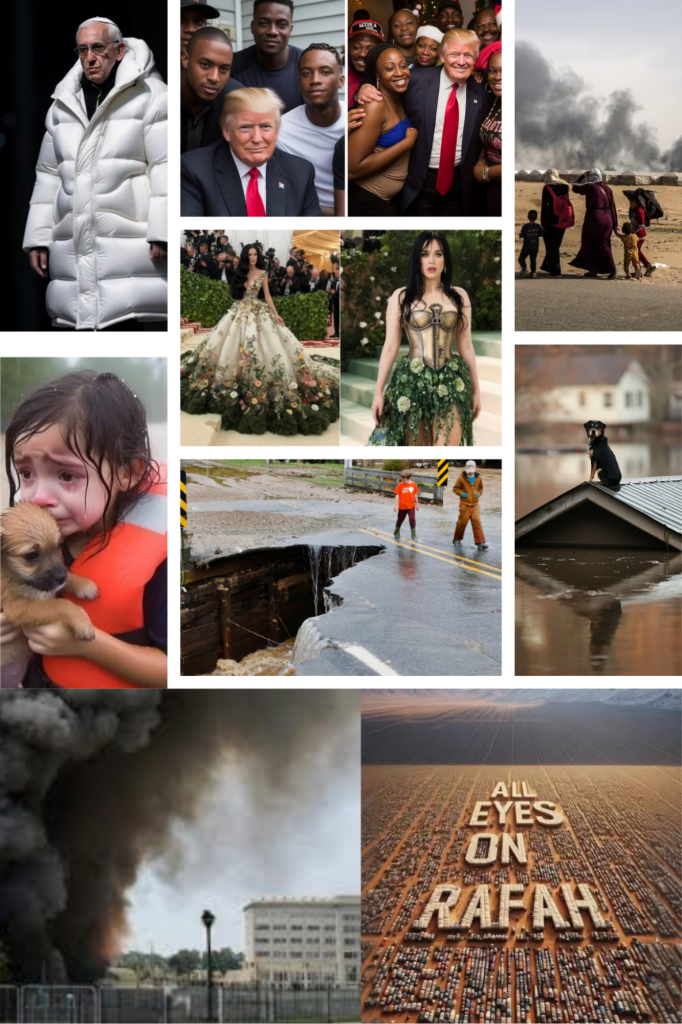

Nowhere has this been more evident than in global crisis reporting. The Rafah conflict became a watershed example when emotionally charged visuals of devastation circulated widely online. Many of these images were later identified as AI-generated, their inconsistencies uncovered only after millions had already viewed and shared them. The public exposure far exceeded the reach of the eventual corrections, demonstrating how misinformation spreads asymmetrically and how slow traditional verification can be in the face of viral content.

High-Profile Cases That Exposed Media Vulnerability

The global media sphere witnessed another instructive incident when a major Asian news outlet published an image of a humanitarian rescue operation – one that appeared authentic at first glance. The photograph originated from social media, where it had amassed significant engagement during a breaking crisis. Editors scrutinized it, yet subtle problem areas (unusual reflections, unnatural lighting transitions, and the faint “plasticity” characteristic of diffusion models) went unnoticed. Days later, external analysts flagged the image as AI-generated. The outlet swiftly retracted it and issued a public apology. But audiences were left with a lingering question: how many other images published worldwide have escaped notice?

The political sphere has proven equally susceptible. During recent election cycles, AI-generated campaign images and fabricated political events circulated widely. Some of these made their way into smaller news portals lacking the technical infrastructure for deep visual authentication. Even after being debunked, they showcased how AI-generated media can distort public perception and infiltrate legitimate reporting streams.

The Loss of Public Trust Through AI-Generated Imagery

These incidents collectively reveal a deeper and more concerning trend. When audiences see journalists struggling to authenticate visuals, confidence in the entire information ecosystem falters. Research on media trust paints a clear picture: when imagery appears unreliable, skepticism extends to written reporting as well. Because photos carry emotional weight and immediacy, their contamination by synthetic media triggers a broader erosion of trust.

Journalists themselves express growing unease. Many have reported spending extensive time scrutinizing photos, second-guessing their editorial decisions, or delaying publication while seeking additional verification. While necessary, these delays place journalists at a competitive disadvantage during fast-evolving events where speed often defines audience reach. The pressure creates a dangerous environment where mistakes are more likely, and even one misstep can severely damage the outlet’s reputation.

Why Traditional Verification Is No Longer Enough

Reverse image searches and metadata analysis once formed the backbone of newsroom verification protocols. But images from today’s generative models arrive without provenance data, and their metadata is often missing or fabricated. The models can also replicate lens distortions, lighting patterns, and camera-like imperfections once used as authenticity markers.
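To make the metadata point concrete, here is a minimal sketch of the kind of check traditional metadata analysis starts with: looking for an Exif block in a JPEG file. The function name `has_exif_segment` is illustrative and not taken from any particular newsroom tool, and the sketch assumes a well-formed JPEG byte stream.

```python
import struct


def has_exif_segment(data: bytes) -> bool:
    """Return True if a JPEG byte string contains an APP1 segment tagged 'Exif'.

    Illustrative helper only: it walks the header segments that precede
    the compressed image data and does not parse the Exif fields themselves.
    """
    if data[:2] != b"\xff\xd8":  # every JPEG starts with the SOI marker
        raise ValueError("not a JPEG stream")
    pos = 2
    while pos + 4 <= len(data):
        if data[pos] != 0xFF:
            break  # not positioned on a marker; stop scanning
        marker = data[pos + 1]
        if marker in (0xDA, 0xD9):  # start of scan / end of image
            break
        # The 2-byte big-endian length counts itself but not the marker.
        (length,) = struct.unpack(">H", data[pos + 2:pos + 4])
        payload = data[pos + 4:pos + 2 + length]
        if marker == 0xE1 and payload.startswith(b"Exif\x00\x00"):
            return True
        pos += 2 + length
    return False
```

The result is weak evidence in both directions. A missing Exif block does not show that an image is synthetic, and a present one does not show that it is genuine, since metadata can be removed or written by anyone. That is why a check like this can no longer carry verification on its own.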

Visual instincts – the expert eye – are no longer sufficient. AI images may contain errors, but those errors are now buried in microscopic patterns invisible without specialized analysis. Diffusion models have become adept at mimicking camera optics, shadows, and textures with alarming realism. As a result, journalists armed only with traditional tools find themselves in a perpetual race they cannot win.

How Image Verification Tools Are Becoming Essential for Journalism

In this new media environment, AI-powered image verification tools have become indispensable. Rather than relying solely on human inspection, these systems incorporate advanced machine-learning detection techniques to flag patterns indicative of synthetic generation. They do not search for individual visual “tells” but rather analyze statistical fingerprints, generative noise signatures, and anomalies that differentiate human-captured images from those produced by neural networks.

WasItAI was specifically developed to address this escalating threat. The tool offers rigorous detection capabilities designed to fit seamlessly into newsroom environments. Whether integrated through an API, used via a web interface, or implemented as a browser extension for rapid verification, WasItAI functions as a checkpoint that newsrooms can activate before publishing. The system evaluates an image and identifies whether it originated from a human camera or an AI model, providing an additional layer of protection against accidental disinformation.
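As a rough illustration of what a pre-publication checkpoint could look like in code, the sketch below routes an image through a pluggable detector before it is cleared. Everything here is hypothetical: `Verdict`, `publication_gate`, the `ai_probability` score, and the 0.5 threshold are assumptions made for the example and do not describe WasItAI's actual API or output format.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Verdict:
    # Hypothetical detector output: estimated probability that the image
    # is AI-generated, in the range [0, 1].
    ai_probability: float


# Any callable that maps raw image bytes to a Verdict can serve as the
# detector, e.g. a wrapper around whichever verification service is used.
Detector = Callable[[bytes], Verdict]


def publication_gate(image: bytes, detect: Detector, threshold: float = 0.5) -> str:
    """Return 'cleared' or 'hold_for_review' for a single image.

    The threshold is an assumed editorial setting, not a recommended value.
    """
    verdict = detect(image)
    if not 0.0 <= verdict.ai_probability <= 1.0:
        raise ValueError("detector returned a score outside [0, 1]")
    if verdict.ai_probability >= threshold:
        return "hold_for_review"
    return "cleared"
```

Taking the detector as a parameter keeps the editorial rule (hold anything at or above the threshold) separate from the detection service, so the gate can be tested with a stub and the service can be swapped without touching the workflow. A "cleared" result means only that the detector did not flag the image. It does not replace editorial judgment.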

Strengthening Editorial Ethics Through Verification Protocols

The adoption of image verification tools has implications beyond technology. These tools contribute to a culture of ethical rigor – one where journalists understand that every visual must undergo verification, not merely those that “look suspicious.” This shift builds internal accountability and reinforces habits of diligence that can help reclaim public trust.

Transparency becomes a vital component of this process. When media organizations openly discuss the steps they take to authenticate visuals, including their use of advanced tools such as WasItAI, they demonstrate to audiences that they take misinformation seriously. Such transparency helps counter the growing cynicism surrounding digital media and reinforces the legitimacy of serious journalism.

The Future: A Race Between Generative Models and Detection Tools

Generative AI models will continue to evolve, producing even more realistic imagery and eventually pushing into video, audio, and synthetic news broadcasts. This trajectory underscores why ongoing innovation in image verification is critical. Journalists will need detection solutions that evolve alongside generative technologies, adapting continuously to new forms of manipulation.

Yet the core principle remains unchanged. Journalism’s value lies in its commitment to truth. Technology may complicate that mission, but it cannot redefine it. The presence of synthetic images in news reporting represents a challenge, not an inevitability. With the right tools, rigorous editorial protocols, and a renewed commitment to verification, media organizations can defend the integrity of the visual record.

Rebuilding Trust in an Era Where Seeing Is No Longer Believing

As AI-generated news photos continue to circulate through the global information ecosystem, the stakes have never been higher. Media outlets can no longer rely on intuition or the assumption that an image is genuine simply because it appears plausible. Verification must become an essential and fully integrated part of every newsroom’s workflow.

WasItAI’s approach provides a practical and reliable safeguard at a time when journalism cannot afford errors. Through consistent use of image verification tools, transparent communication with audiences, and disciplined editorial standards, newsrooms can strengthen their defenses against synthetic media. In doing so, they not only protect themselves from reputational damage but also reaffirm their role in society as reliable intermediaries of truth.

In an age where seeing is no longer believing, trust must be rebuilt image by image, and the effort to preserve journalistic integrity begins with verification.