It used to be simple. A customer sent a photo of a damaged item, and a merchant issued a refund – because, well, the proof was right there in the picture.

But in 2025, “seeing is believing” doesn’t hold up like it used to. Thanks to generative AI, anyone can make an image look exactly the way they want – including damage that never actually happened. And that’s opening a brand-new chapter in refund fraud.

AI image tools are no longer just for artists or marketers. They’ve become a new weapon for scammers – helping them fake photos, receipts, or packaging to squeeze refunds from honest sellers.

So let’s unpack what’s really going on, why it’s spreading so quickly, and what sellers can do to protect themselves without turning every customer into a suspect.

The New Face of Refund Fraud

Refund abuse has been around as long as online shopping itself. There have always been people who claim a package never arrived, or that something was “broken on delivery” when it wasn’t.

But the difference now is how convincing those claims can look. AI image generators make it ridiculously easy to create “evidence.”

With just a few clicks, someone can:

- add scratches or dents to a photo of a product,

- make a fake image of a shattered item, or

- generate a completely new picture showing something that never existed.

No photo-editing skills are needed: just a prompt like “make this baby set look dirty and torn,” and the AI does the rest.

For merchants, this means the usual photo-based verification no longer guarantees the truth. What looks like clear evidence of damage could be a digital illusion.

Why This Works So Well for Fraudsters

A big part of the problem is how returns work today. Most online stores want to make the process as fast and easy as possible, which is great for real customers, but also great for fraudsters.

When refunds are issued before items are inspected (or when a photo alone is accepted as proof), there’s room for abuse. And when that photo can now be AI-made or AI-enhanced, the risk skyrockets.

There’s also another factor: AI doesn’t just make fake images – it makes believable ones. Many tools are so advanced that even trained eyes can struggle to tell what’s real. Unless you’re running specialized analysis, these images can easily slip through.

And because refund abuse is relatively low-risk – few scammers get caught – it’s becoming a fast-growing form of digital fraud.

The Real-World Cost for Businesses

Refund fraud isn’t just about one lost product. It adds up quickly.

Retailers not only lose the item’s value but also pay the shipping, restocking, and customer support costs that come with every false claim. Over time, that can mean thousands, even millions, in unnecessary losses.

But the hidden cost is trust. To fight fraud, many sellers tighten their policies: more forms, more photos, slower approvals. Unfortunately, that can frustrate legitimate buyers. It’s a delicate balance between staying safe and staying customer-friendly.

The Visual Trust Problem

For years, “send us a photo” was the gold standard of proof. It was quick, fair, and seemed objective.

Now that same system is the weakest link.

AI image tools are trained to create pictures that look exactly like camera photos. They mimic the lighting quirks, shadows, and natural angles of real shots. Some tools can also modify metadata, so you can’t rely on file details to spot the fakes anymore.

So when a refund claim arrives with a photo of a cracked phone screen, how do you know if that crack is real?

That’s the challenge facing sellers today: visual trust has eroded. And without new ways to verify authenticity, fraudsters have an open door.

Smarter Ways to Fight Back

Stopping AI-driven refund fraud isn’t about distrusting every customer. It’s about building smarter systems that make fraud harder, and honesty easier.

Here are a few ways to start.

1. Ask for more context, not just a picture

Instead of relying on one static photo, request short videos, multiple angles, or “live” photos. It’s much harder for an AI model to consistently fake those.

For example, asking a customer to record a quick video showing the defect while moving the item under the light can reveal inconsistencies AI tends to miss.

2. Add an image-verification step

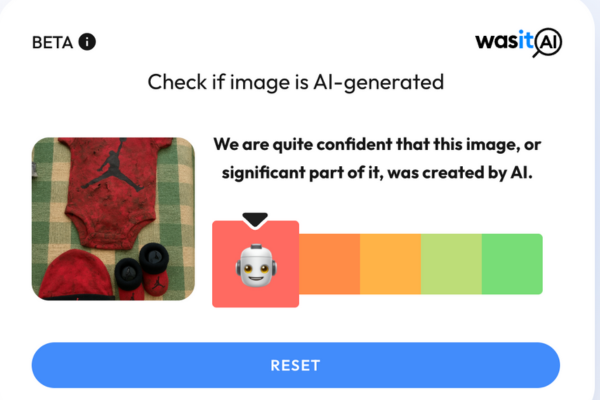

There are emerging tools (like our own WasItAI detector) that can tell whether an image was AI-generated or AI-altered. They’re not foolproof, but they can flag suspicious cases for manual review.

Even if you don’t use automation, training your team to look for telltale signs – strange edges, odd lighting, repeating textures – can make a big difference.
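To make “flag suspicious cases for manual review” concrete, here is a minimal Python sketch of a triage step. It assumes a detector that returns a probability between 0 and 1 that an image was AI-generated or AI-altered; the `ai_probability` input, the `triage_claim_photo` name, and the 0.5 and 0.85 thresholds are hypothetical illustrations, not WasItAI’s actual interface or recommended settings.

```python
from enum import Enum


class PhotoDecision(Enum):
    ACCEPT = "accept"                    # no strong sign of manipulation
    MANUAL_REVIEW = "manual_review"      # ambiguous score: a person takes a look
    PRIORITY_REVIEW = "priority_review"  # strong signal: senior reviewer, ask for video


# Hypothetical thresholds; tune them against your own labelled claims.
REVIEW_THRESHOLD = 0.5
PRIORITY_THRESHOLD = 0.85


def triage_claim_photo(ai_probability: float) -> PhotoDecision:
    """Map an assumed detector score (0.0 to 1.0) to a review action.

    Detectors are not foolproof, so even a high score routes the claim
    to a human instead of rejecting it automatically.
    """
    if not 0.0 <= ai_probability <= 1.0:
        raise ValueError("ai_probability must be between 0 and 1")
    if ai_probability >= PRIORITY_THRESHOLD:
        return PhotoDecision.PRIORITY_REVIEW
    if ai_probability >= REVIEW_THRESHOLD:
        return PhotoDecision.MANUAL_REVIEW
    return PhotoDecision.ACCEPT


if __name__ == "__main__":
    for score in (0.12, 0.6, 0.93):
        print(score, triage_claim_photo(score).value)
```

The design choice worth noting is that no score leads to an automatic denial: the tool narrows where your team spends attention, and a person still makes the call.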

3. Combine visual checks with behavioral insight

Look at patterns:

- Is this a new customer making multiple “damaged item” claims?

- Are refunds requested for high-value products more often than usual?

- Does the same account reuse photos from past orders?

AI images are powerful, but they still need humans to submit them. That’s where pattern recognition can help expose repeat offenders.
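The three questions above can be turned into simple rules. The sketch below is a minimal Python illustration, assuming you can export each account’s refund claims with an account age, an order value, a claim reason, and the uploaded photo bytes; the `Claim` fields, the `risk_flags` name, and every threshold are hypothetical. The photo check compares SHA-256 digests, which only catches byte-identical reuse; a resized or re-compressed copy would need a perceptual hash instead.

```python
import hashlib
from dataclasses import dataclass, field


@dataclass
class Claim:
    account_id: str
    account_age_days: int
    order_value: float
    reason: str
    photos: list[bytes] = field(default_factory=list)


# Hypothetical thresholds, for illustration only.
NEW_ACCOUNT_DAYS = 30
MAX_DAMAGE_CLAIMS_NEW_ACCOUNT = 1
HIGH_VALUE = 200.0
MAX_HIGH_VALUE_CLAIMS = 2


def risk_flags(history: list[Claim], new_claim: Claim) -> list[str]:
    """Return human-readable reasons to review new_claim more closely."""
    flags = []
    # Earlier claims filed by the same account.
    past = [c for c in history if c.account_id == new_claim.account_id]
    all_claims = past + [new_claim]

    # 1. New customer making multiple "damaged item" claims.
    damage_claims = [c for c in all_claims if c.reason == "damaged"]
    if (new_claim.account_age_days < NEW_ACCOUNT_DAYS
            and len(damage_claims) > MAX_DAMAGE_CLAIMS_NEW_ACCOUNT):
        flags.append("new account with multiple damage claims")

    # 2. More refund requests on high-value orders than the threshold allows.
    high_value = [c for c in all_claims if c.order_value >= HIGH_VALUE]
    if len(high_value) > MAX_HIGH_VALUE_CLAIMS:
        flags.append("repeated high-value refund requests")

    # 3. Photos byte-identical to ones sent with this account's earlier claims.
    seen = {hashlib.sha256(p).hexdigest() for c in past for p in c.photos}
    if any(hashlib.sha256(p).hexdigest() in seen for p in new_claim.photos):
        flags.append("photo reused from a previous claim")

    return flags


if __name__ == "__main__":
    history = [Claim("acct-1", 10, 250.0, "damaged", [b"photo-A"])]
    new = Claim("acct-1", 12, 300.0, "damaged", [b"photo-A"])
    print(risk_flags(history, new))
```

Run as-is, the example prints the first and third flags. None of these rules proves fraud on its own; each is a reason to look more closely.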

4. Keep your refund policy fair, but smart

You don’t need to punish everyone to stop a few bad actors. Instead, design flexible policies that tighten controls only when the risk is high.

For example:

- Offer instant refunds for low-value items but require returns or manual review for high-value ones.

- Clearly state that all image submissions may be verified for authenticity. Just knowing that can discourage attempts.

Transparency can be one of your strongest deterrents.
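A risk-tiered policy like this is mostly a routing decision. Here is a minimal Python sketch under assumed numbers: the 50 and 200 value limits and the `route_refund` name are hypothetical, and `flag_count` stands in for however many warning signs your own checks raise.

```python
from enum import Enum


class RefundRoute(Enum):
    INSTANT = "instant refund"
    MANUAL_REVIEW = "manual review"
    RETURN_REQUIRED = "return item before refund"


# Hypothetical value tiers in your store's currency.
INSTANT_LIMIT = 50.0
RETURN_LIMIT = 200.0


def route_refund(order_value: float, flag_count: int) -> RefundRoute:
    """Choose the lightest control that fits the claim's value and risk.

    Low-value, unflagged claims get an instant refund so honest customers
    are not slowed down; controls tighten only as value or risk grows.
    """
    if order_value >= RETURN_LIMIT:
        return RefundRoute.RETURN_REQUIRED
    if order_value >= INSTANT_LIMIT or flag_count > 0:
        return RefundRoute.MANUAL_REVIEW
    return RefundRoute.INSTANT


if __name__ == "__main__":
    print(route_refund(19.99, 0).value)  # instant refund
    print(route_refund(19.99, 1).value)  # manual review
    print(route_refund(120.0, 0).value)  # manual review
    print(route_refund(450.0, 0).value)  # return item before refund
```

Because the checks run from strictest to lightest, a high-value claim can never fall through to the instant path, even when no flags are raised.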

How AI Detection Can Help

Tools that identify AI-generated content are becoming an important piece of the fraud-prevention puzzle.

By analyzing tiny details invisible to the human eye, like pixel patterns or digital “fingerprints”, these tools can reveal whether an image came from a camera or a generative model.

That’s exactly what WasItAI was built for: to restore trust in visual content.

Imagine receiving a refund claim photo and running it through an AI-image detector. Within seconds, you’d have a strong indication of whether that picture came from a camera or was likely generated or edited by AI.

It’s not just about catching fraudsters; it’s about protecting your business from having to second-guess every single customer photo.

Building a Culture of Caution (Without Paranoia)

Technology alone won’t solve the problem. You also need awareness – across your team and your customers.

Customer support agents should know what to look for when reviewing claims. A little training on how AI fakes work can help them spot suspicious images faster.

At the same time, communicate your policy openly. Most customers appreciate knowing that you take fraud seriously, especially if it helps keep prices fair and refunds fast for honest buyers.

Fraud thrives on complacency. When everyone in your company understands that photos can lie, you’ve already closed one major gap.

The Road Ahead

Generative AI is here to stay, and so are the scams that come with it. As tools become more advanced, fake images will only get harder to spot.

But there’s a silver lining: awareness is spreading just as fast. More merchants are testing image-verification technology, and new partnerships are forming between fraud-prevention platforms and AI-detection services.

The future of e-commerce won’t be about eliminating risk entirely – that’s impossible. It’ll be about managing it intelligently, using every tool available to stay one step ahead.

Refund fraud powered by AI images isn’t just a tech issue – it’s a trust issue. Sellers, marketplaces, and consumers all rely on the assumption that what they see is real.

Now, that assumption needs backup.

By blending smarter verification, better tools, and transparent communication, businesses can protect themselves without losing the human touch that makes good customer service what it is.

AI may be rewriting the rulebook for fraud, but it’s also giving us the means to detect it, and to keep truth on our side.