For years, financial institutions have relied on a simple assumption: a real human stands behind every selfie used in a Know Your Customer (KYC) check. The photo a user submits – whether a face scan, a video blink test, or an image of an ID – has long been treated as one of the strongest signals of legitimacy. But a new generation of generative AI tools is breaking that trust. A convincing identity can now be created out of thin air, complete with a flawless selfie, a matching ID document, and even a short video that mimics natural movements. The face you see on the screen may not belong to anyone who has ever existed.

This is not a theoretical threat. Financial regulators, banks, and fintech providers are already reporting sharp increases in synthetic identity attacks powered by AI. Fraud rings that once needed stolen photos or physical IDs can now fabricate entire personas with little more than a prompt and a GPU. Their creations look perfectly normal – normal enough that many verification systems, including commercially deployed facial recognition models, simply let them through.

The rise of AI-generated faces is quietly changing the battlefield of digital identity. And if the industry does not adapt quickly, the basic mechanics of onboarding could become dangerously unreliable.

The New Face of Fraud Is Not Human

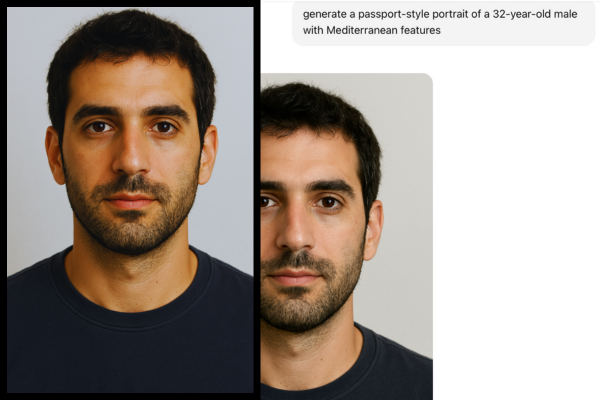

Generative AI models specializing in faces, headshots, and ID-style photography have reached a level of realism that is difficult to distinguish from genuine images. Many of the tools used to create these synthetic identities are open-source, fast, and require no technical expertise to operate. Type “generate a passport-style portrait of a 32-year-old male with Mediterranean features” into the right model and within seconds you have a high-resolution face that appears plausible in every respect.

TechCrunch recently put it bluntly: generative AI has the potential to make KYC “effectively useless.” The article describes how bad actors now generate thousands of unique faces at scale, each with consistent lighting, proper alignment, and the subdued expressions typical of identity photos. These images do not trigger red flags because they do not resemble manipulated content. They are not Photoshop jobs; they are fully synthetic, often cleaner and more uniform than a real camera capture.

FDM Group’s analysis reaches similar conclusions. AI-generated deepfakes can replicate both faces and documents, enabling fraudsters to pass verification steps that were once considered secure. What used to require a stolen ID and risky physical interaction has become an entirely digital crime, where no real person needs to be involved.

Why Traditional KYC Systems Are Losing Ground against AI

Legacy KYC infrastructure has a blind spot: it assumes deception comes from altering a real photo rather than fabricating one from scratch. The models powering facial recognition and document verification are designed to detect inconsistencies such as blurring, mismatches between face and ID, visible tampering, or low-quality edits. But synthetic images are not “edited” in the traditional sense. They are created whole, with no trace of manipulation and none of the irregularities one would expect from an altered photo.

Some verification systems attempt to counter fraud by requiring liveness tests: asking users to blink, smile, turn their head, or perform short movements. But generative AI has caught up here too. Newer deepfake tools specialize in animating still images, generating realistic micro-movements that mimic human motion. Fraudsters can take a synthetic headshot and convert it into a short responsive video that passes liveness checks with unsettling ease.

Behind the scenes, most identity verification models operate on probability scores rather than absolute certainty. If the face-match and liveness scores clear a configured threshold, the applicant passes. The trouble is that synthetic content now clears those thresholds. And because many institutions run automated KYC checks with minimal human oversight, the fraudulent accounts often enter the system unnoticed.
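
The threshold problem can be shown with a minimal Python sketch. Everything in it is an assumption made for illustration: the `VerificationScores` fields, the `passes_kyc` function, the threshold values, and the example scores are hypothetical and do not come from any specific vendor or dataset. It only shows that a pure threshold check cannot tell a genuine applicant from a synthetic one once both score above the cutoff.

```python
from dataclasses import dataclass


@dataclass
class VerificationScores:
    """Hypothetical scores a KYC pipeline might produce, each in the range 0..1."""
    face_match: float  # similarity between the selfie and the ID photo
    liveness: float    # confidence that the subject is a live person


def passes_kyc(scores: VerificationScores,
               face_threshold: float = 0.80,
               liveness_threshold: float = 0.70) -> bool:
    """Approve when both scores clear their (illustrative) thresholds."""
    return (scores.face_match >= face_threshold
            and scores.liveness >= liveness_threshold)


# Illustrative values only, not measurements.
genuine_applicant = VerificationScores(face_match=0.91, liveness=0.88)
synthetic_applicant = VerificationScores(face_match=0.93, liveness=0.81)
poor_capture = VerificationScores(face_match=0.62, liveness=0.90)

for name, scores in [("genuine", genuine_applicant),
                     ("synthetic", synthetic_applicant),
                     ("poor capture", poor_capture)]:
    print(name, "->", "pass" if passes_kyc(scores) else "fail")
```

In this sketch the synthetic applicant passes and the poorly captured genuine photo fails, because the check never asks where the image came from.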

Banks and fintechs have begun reporting patterns that hint at this shift: multiple new accounts tied to identities that appear consistent yet seem strangely “perfect”; customers passing facial verification despite never appearing in any public record; fraud clusters where dozens of people – none of whom truly exist – open accounts and drain small onboarding bonuses. These synthetic identities are almost like ghosts: complete on paper, fully functional in the system, but with no physical person behind them.

The Cost of an AI Selfie

Synthetic identity fraud is now one of the fastest-growing forms of financial crime. Unlike traditional identity theft, where a criminal impersonates a real individual, synthetic fraud blends real and fake elements – or, increasingly, involves no real person at all. Once a synthetic identity slips through KYC, the attacker can open bank accounts, apply for loans, register SIM cards, and conduct cross-border transfers with little risk. Meanwhile, the institution experiences mounting losses, operational headaches, and regulatory scrutiny.

Insurers face similar threats. Fraudsters file claims under AI-generated identities, attaching photos of staged injuries or fabricated damage to accounts that were opened with synthetic faces. Because the “customer” technically exists only as pixels, holding them accountable becomes nearly impossible.

The implications extend beyond financial losses. When onboarding systems fail, criminal networks gain access not just to banking services but to the wider digital ecosystem: crypto exchanges, credit platforms, remittance services, online marketplaces. Synthetic faces become the keys that unlock entire infrastructures that depend on trust.

How Image Authenticity Tools Close the Gap

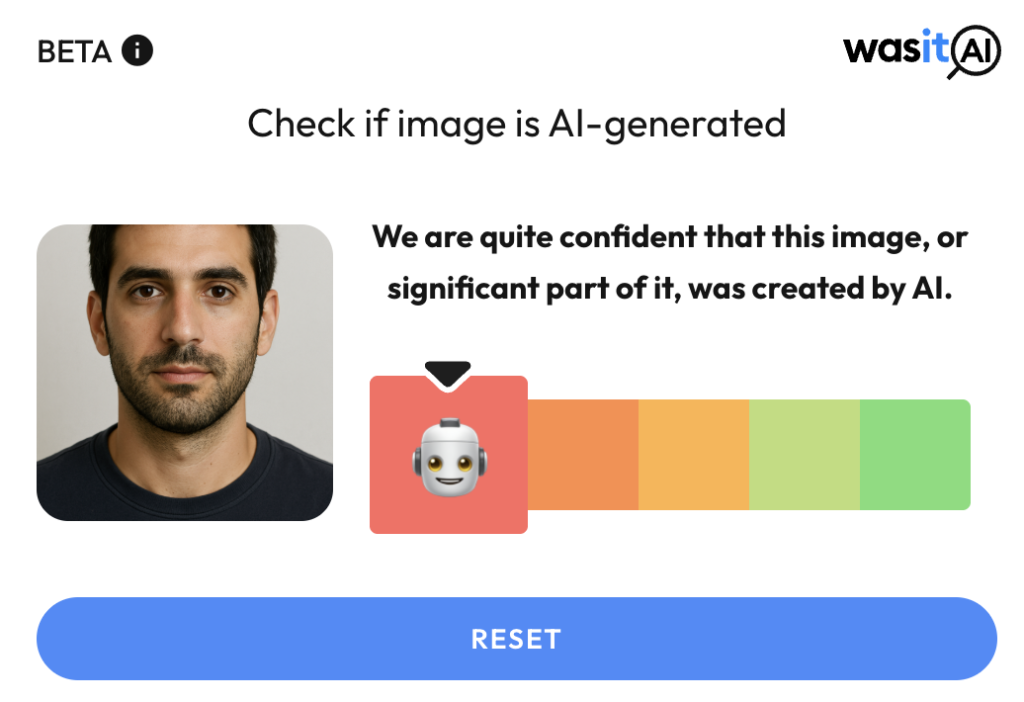

If traditional KYC models are failing because they treat synthetic faces as genuine, the solution lies in going deeper – looking not at whether a face looks plausible, but at whether the image itself shows signs of being AI-generated.

This is where authenticity-detection tools such as WasItAI come into play. Instead of evaluating the identity of the person in the image, they evaluate the integrity of the image itself. Their goal is not to recognize the face but to determine whether the face was ever captured by a real camera in the first place.

Modern image-authenticity systems analyze patterns invisible to the human eye. Generative AI models leave subtle fingerprints: textural inconsistencies, anomalous noise patterns, unnatural edge formations, and lighting uniformities that rarely occur in real photography. Synthetic faces often lack the biological asymmetries and micro-imperfections of real human faces, and the images lack the sensor artifacts that a real camera leaves behind. While a typical verification model might miss these clues, authenticity tools are trained specifically to detect them.

By integrating authenticity checks into onboarding, institutions add a new defensive layer: before assessing whether the customer is who they claim to be, the system determines whether the photo itself belongs to the real world.
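
One way to picture this ordering is a small Python sketch in which the authenticity check gates the identity check. The function names, the `min_authenticity` cutoff, and the returned status strings are all hypothetical. The two checks are passed in as plain callables so that no particular vendor API, including WasItAI's, is assumed.

```python
from typing import Callable


def onboard(image: bytes,
            authenticity_score: Callable[[bytes], float],
            verify_identity: Callable[[bytes], bool],
            min_authenticity: float = 0.90) -> str:
    """Run the authenticity check first, then the identity check.

    authenticity_score: hypothetical detector returning the estimated
        probability (0..1) that the image came from a real camera.
    verify_identity: hypothetical face-match / liveness step.
    min_authenticity: illustrative cutoff, not a recommended value.
    """
    # Step 1: does the photo itself belong to the real world?
    if authenticity_score(image) < min_authenticity:
        # Identity matching is skipped; a human reviewer takes over.
        return "manual_review"

    # Step 2: only now ask whether the customer is who they claim to be.
    if not verify_identity(image):
        return "rejected"

    return "approved"


# Usage with stand-in checks (real systems would call actual models here).
print(onboard(b"fake-image-bytes",
              authenticity_score=lambda img: 0.12,
              verify_identity=lambda img: True))
```

Routing low-authenticity images to manual review rather than rejecting them outright is a design choice in this sketch, made because any detector will sometimes flag genuine photos.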

Banks and Fintechs Can No Longer Rely on Selfies Alone

As AI fraud grows more sophisticated, banks, fintechs, and insurers cannot afford to depend solely on facial matching or liveness tests. A synthetic face can pass both. What it cannot easily evade, at least not yet, is advanced AI-detection technology built specifically to spot generative content.

The industry is already inching toward this realization. Some institutions have begun experimenting with authenticity-analysis APIs that run in parallel with their KYC workflow. Others are exploring hybrid approaches that combine metadata analysis, camera-source validation, and deep-learning classifiers designed to differentiate camera-origin images from model-origin ones. But adoption remains uneven, and many organizations still underestimate the scale of the threat.
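
A hybrid approach of this kind could be sketched as a weighted combination of the three signals. This is a sketch under stated assumptions, not a description of any deployed system: the `ImageSignals` fields, the weights, and the review threshold are invented for illustration, and a real deployment would need to calibrate them on its own data.

```python
from dataclasses import dataclass


@dataclass
class ImageSignals:
    """Hypothetical outputs of three independent checks on one image."""
    metadata_consistent: bool      # metadata analysis found nothing suspicious
    capture_source_verified: bool  # image came from a validated camera source
    synthetic_probability: float   # classifier estimate (0..1) of model origin


def synthetic_risk(signals: ImageSignals,
                   w_classifier: float = 0.60,
                   w_metadata: float = 0.15,
                   w_source: float = 0.25) -> float:
    """Combine the signals into one risk value between 0 and 1.

    The weights are illustrative. Metadata gets the smallest weight here
    because it can be stripped or forged.
    """
    risk = w_classifier * signals.synthetic_probability
    if not signals.metadata_consistent:
        risk += w_metadata
    if not signals.capture_source_verified:
        risk += w_source
    return risk


def route(signals: ImageSignals, review_threshold: float = 0.50) -> str:
    """Send risky images to a reviewer, let the rest continue through KYC."""
    if synthetic_risk(signals) >= review_threshold:
        return "manual_review"
    return "continue_kyc"


print(route(ImageSignals(True, True, 0.05)))
print(route(ImageSignals(False, False, 0.90)))
```

Combining signals means an attacker has to defeat all three checks at once, rather than only the classifier.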

The challenge is psychological as much as technical. Trust in human faces runs deep, and the idea that an entirely fabricated person could pass onboarding feels like science fiction. Yet the evidence is clear: fraudsters are not just using generative AI – they are industrializing it. Every month brings new tools capable of producing more convincing identities, better deepfakes, and more consistent document simulations.

KYC, in its current form, simply wasn’t built for this era.

A Future Where Authenticity Comes First

The rise of AI-generated identities does not mean that KYC is doomed. Instead, it signals a shift toward a new model of verification – one that treats authenticity as the foundation of trust rather than an afterthought.

In this emerging model, the first question becomes: Was this image genuinely captured by a camera? Only then does the system move on to verifying who the person is.

Tools like WasItAI are part of the infrastructure needed to make that shift possible. They provide a crucial capability that legacy verification systems lack: the ability to distinguish between a real human face and a synthetic one before any matching or recognition takes place.

The institutions that adopt this approach early will be far better positioned to defend against the coming wave of AI-powered fraud. Those that wait may soon find themselves overwhelmed by an influx of synthetic customers, each one a polished illusion crafted by models designed to exploit outdated assumptions.

In this new landscape, the selfie is no longer a simple snapshot of identity. It is a potential attack vector. Ensuring its authenticity may become the most important step in protecting financial systems from a future where anyone, and no one, can be manufactured with a prompt.