Swipe left, swipe right – for millions of singles, dating apps have become a chief venue for meeting new people. But beneath the polish of glossy profile photos and witty bios, a new menace is spreading: the increasing use of AI to create fake, but highly believable, dating profiles. These AI-generated images, combined with AI-written text, are undermining trust and opening the door to scams. The question now is: whose job is it to police authenticity – and how fast must dating apps act before their users simply give up?

Why AI images are appealing to fraudsters

Catfishing and fake profiles have long plagued dating platforms, in the form of misleading photos, stolen images, or entirely fictitious identities. AI changes the game. Now, instead of borrowing someone’s selfies, bad actors can generate photorealistic human faces (or composite bodies) tailored to ideal attractiveness, demographics, and backgrounds. Because these images are novel, they can slip past reverse image lookups and still look convincingly real.

That makes them ideal for creating profiles that seem too good to be true – and often are. In some cases, dozens or hundreds of profiles might use similar facial features with slight variations, forming a web of fake accounts.

Anecdotes show this is already happening. On forums like Reddit, users report seeing batches of “AI-generated fake profiles” on apps like Bumble, often all verified by the platform’s own process – which suggests gaps in verification systems. Meanwhile, reviews of dating apps note growing concern about convincing “too perfect” profile pictures with unnatural composition or lighting.

Fake images as gateway to scams

Fake image profiles aren’t just about deception for fun – they are often a tool in romance scams and financial fraud. A typical pattern:

- A fake profile with a polished photo draws in matches.

- The scammer may avoid in-person or live video interaction, citing reasons like travel, remote work, or other excuses.

- Over time, they build trust (compliments, emotional sharing).

- Finally, they ask for money (help with a visa, medical costs, travel, etc.), often applying coercive or emotional pressure on the target.

Because the face looks “real,” users may let down their guard. These scams prey on people seeking companionship, love, or emotional connection, which makes them both manipulative and potentially costly.

The increase in romance fraud more broadly has been noted by authorities: scammers using dating apps to build long-term relationships with victims is a growing vector of cybercrime. AI-generated photos simply make such scams easier to run and harder to detect.

AI beyond images: auto-generated bios, chat, and messages

While fake visuals are especially pernicious, AI also seeps into textual deception on dating apps.

- Bios and prompts: Many users already rely on AI (e.g. ChatGPT or copy-paste prompt tools) to craft their profile text, “About Me” blurbs, or icebreakers. According to The Cut, one survey noted 11% of respondents reported using AI to write dating profiles, and 10% for opening lines. The line between enhancing your writing and misrepresentation can be blurry.

- Chat assistance / persona bots: Some people may even lean on AI to generate their conversation replies (or entire persona scripts) to make themselves sound more witty or fluent. In extreme cases, the “user” may be only partially human – or not human at all.

- Hybrid deception: A profile may use a real photograph (stolen or scraped) but pair it with AI-written text or AI-driven chat, blending real and synthetic elements.

These practices dilute authenticity: how do you know if you are interacting with real intent or a machine playing at courtship? As The Cut article puts it, people already fake a lot; AI just gives them a more powerful tool to smooth over gaps. Some feel as though the experience of messaging has turned into “reading fiction meant to flatter.”

The consequences: diminishing trust, burnout, and decline

When fake images and AI deception become more widespread, several negative effects follow:

- User distrust: If too many accounts feel fake, genuine users may become cynical and skeptical, or stop trusting photos and bios altogether. The platform loses credibility.

- Dating fatigue / burnout: Endless swiping and the sense that “nothing is real” intensify frustration. Many users already report fatigue with dating apps.

- Lower conversion / engagement: Users may match less, respond less, or drop out entirely. For apps whose business depends on active users, that is a serious risk.

- Victim risk: Some users are exposed to financial or emotional harm via romance fraud, especially when sophisticated deception camouflages the red flags.

- Algorithmic distortion: Platforms may optimize engagement (swipe metrics, match rates) in ways that inadvertently favor fake or sensational profiles – an economic incentive misaligned with authenticity. A critical view in the “Algorithmic Colonization of Love” points out how romantic decision-making increasingly gets delegated to algorithms, which may crowd out real communicative interaction.

Thus the problem is not just a few bad apples; it threatens the entire fabric of trust in online dating.

Who should police authenticity? A question of responsibility

When faced with rampant AI-assisted deception, the natural question arises: who is responsible for verifying profile validity?

1. The dating app’s duty

One argument is that the onus lies squarely on the dating platforms. After all:

- They control onboarding, verification, moderation, and algorithms.

- They benefit directly if users remain active and engaged.

- They are best positioned to deploy technical defenses (image analysis, behavioral modeling, anomaly detection).

- They also carry reputational risk: apps that acquire a reputation for fake profiles lose users.

Hence many argue platforms must proactively validate accounts rather than waiting for users to flag fakes. Some have already taken steps. For example:

- Bumble introduced a “Deception Detector” model claimed to block up to 95% of fake/spam accounts.

- Some apps are experimenting with video selfie verification or liveness checks to confirm identity. Wired reported that Hinge was working on “Selfie Verification” to compare live video to profile photos.

- Besedo (a moderation platform) uses AI-based pattern recognition to flag accounts with suspicious behavior: rapid messaging, repeated content, etc.
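The behavioral signals named above (rapid messaging, repeated content) can be illustrated with a minimal sketch. This is not Besedo's or any platform's actual method; the `Message` type, the function name, and both thresholds are hypothetical choices made only for illustration.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class Message:
    sent_at: float  # seconds since some reference time (hypothetical field)
    text: str


def behavior_flags(messages, max_per_minute=10.0, max_repeat_ratio=0.5):
    """Return the reasons an account looks suspicious (empty list if none).

    Both thresholds are illustrative assumptions, not tuned values.
    """
    flags = []
    if len(messages) < 2:
        return flags

    # Rapid messaging: average send rate over the observed time span.
    # Spans shorter than one minute are treated as one minute.
    times = [m.sent_at for m in messages]
    span_minutes = max((max(times) - min(times)) / 60.0, 1.0)
    if len(messages) / span_minutes > max_per_minute:
        flags.append("rapid_messaging")

    # Repeated content: share of messages matching the most common text,
    # after lowercasing and collapsing whitespace.
    normalized = [" ".join(m.text.lower().split()) for m in messages]
    most_common_count = Counter(normalized).most_common(1)[0][1]
    if most_common_count / len(messages) > max_repeat_ratio:
        flags.append("repeated_content")

    return flags


spam = [Message(i * 2.0, "Hey gorgeous, text me on my other app") for i in range(30)]
normal = [
    Message(0.0, "Hi!"),
    Message(300.0, "How was your weekend?"),
    Message(900.0, "Mine was good"),
]
print(behavior_flags(spam))    # ['rapid_messaging', 'repeated_content']
print(behavior_flags(normal))  # []
```

Simple rules like these are easy to evade, which is one reason a real system would likely combine many signals rather than rely on two fixed thresholds.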

Yet the existence of such systems does not guarantee perfection. Many fakes still leak through, verification processes are sometimes weak, and scaling manual review is costly.

2. The user’s role

Some contend users must shoulder part of the burden:

- Skeptical inspection: comparing photos, video calls, requesting live images.

- Reporting suspicious profiles aggressively.

- Being cautious about sharing personal data or sending money.

But expecting all users to have the vigilance, technical literacy, or time to detect AI deception is unrealistic. Many are vulnerable, emotionally invested, or simply want to trust what they see.

3. A shared model: regulation, standards, and third-party audits

A middle ground is to advocate a shared responsibility model:

- Regulation and accountability: Governments or regulatory bodies could require dating platforms to meet minimum authentication standards (e.g. identity verification, fraud audits, transparency around moderation).

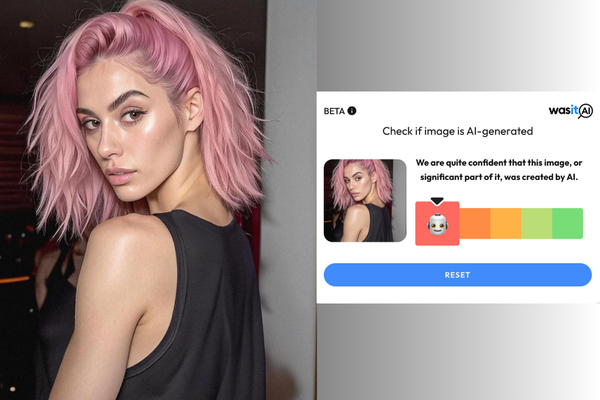

- Third-party validators: Independent or third-party AI detection services (like WasItAI, which we’ll discuss) could audit or certify platforms’ profiles.

- Community oversight: Platforms could integrate community flagging plus incentive structures for verified, long-standing users.

Ultimately, the fastest path to restoring user trust is likely a model where dating apps bear the primary responsibility, but operate under oversight and transparency so that users and third parties can hold them accountable.

A potential tool: AI detectors such as WasItAI

One promising technical solution is to embed AI detectors in the verification and moderation chain. A detector like WasItAI aims to spot synthetic or AI-generated images by analyzing subtle artifacts, noise patterns, or inconsistencies in lighting, texture, or facial realism.

Here’s how such detectors might help:

- Pre-screening at registration: When a user uploads profile images, the detector can flag suspicious ones before the profile becomes visible.

- Ongoing monitoring: As users add new photos, change images, or rearrange their profile, the detector can re-evaluate.

- Remove or mark uncertain profiles: Profiles with a “gray zone” score could be subject to manual review or temporarily hidden until cleared.

- Public transparency / trust indicators: The app could visibly tag “verified non-synthetic” profiles, giving honest users a trust signal.

- Plugin or audit service: WasItAI or similar services could act as external auditors, scoring or certifying a dating app’s authenticity level.
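The pre-screening and “gray zone” steps above can be sketched as a simple triage rule. This assumes a detector that returns a score between 0 (likely real) and 1 (likely synthetic) for each photo; no real detector's API is shown here, and the function names and thresholds are hypothetical.

```python
def triage(synthetic_score, approve_below=0.2, reject_above=0.8):
    """Map one photo's detector score to a moderation decision.

    The score scale and both thresholds are illustrative assumptions.
    """
    if not 0.0 <= synthetic_score <= 1.0:
        raise ValueError("score must be between 0 and 1")
    if synthetic_score < approve_below:
        return "approve"
    if synthetic_score > reject_above:
        return "reject"
    # Gray zone: keep the profile hidden until a human reviewer clears it.
    return "manual_review"


def screen_profile(photo_scores, approve_below=0.2, reject_above=0.8):
    """Decide on a whole profile using its most suspicious photo."""
    if not photo_scores:
        # No photos to score: leave the decision to a human.
        return "manual_review"
    return triage(max(photo_scores), approve_below, reject_above)


print(screen_profile([0.05, 0.10]))  # approve
print(screen_profile([0.05, 0.50]))  # manual_review
print(screen_profile([0.05, 0.95]))  # reject
```

Re-running `screen_profile` whenever a user adds or changes photos would cover the ongoing-monitoring step. Where to set the two thresholds is a trade-off between reviewer workload and the risk of letting fakes through.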

Of course, no detector is perfect – adversarial actors may try to fool detectors, and false positives are a risk (i.e. real users rejected). But as AI synthesis tools get more advanced, detection is becoming a must. Without it, platforms risk being outpaced by the deception tools.
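To see why false positives matter, a rough back-of-the-envelope calculation helps. The figures below (5% of profiles fake, 95% of fakes caught, 2% of real profiles wrongly flagged) are invented purely for illustration and do not describe any real detector or platform.

```python
def share_of_flags_that_are_fake(prevalence, true_positive_rate, false_positive_rate):
    """Fraction of flagged profiles that are actually fake (Bayes' rule)."""
    flagged_fake = prevalence * true_positive_rate
    flagged_real = (1.0 - prevalence) * false_positive_rate
    total_flagged = flagged_fake + flagged_real
    if total_flagged == 0:
        raise ValueError("nothing would be flagged with these rates")
    return flagged_fake / total_flagged


# Hypothetical numbers: 5% fake profiles, 95% detection rate, 2% false alarms.
share = share_of_flags_that_are_fake(0.05, 0.95, 0.02)
print(f"{share:.0%} of flagged profiles are fake")  # 71% of flagged profiles are fake
```

Under these assumed rates, roughly three in ten flagged profiles would belong to real people, which is why routing uncertain cases to manual review is safer than rejecting them outright.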

In short, incorporating detectors like WasItAI should be part of the toolkit – not a magic bullet but a crucial line of defense.

Why dating apps must act – and fast

If platforms delay action, they face real danger:

- User attrition: Disillusioned users will migrate to other apps or ditch online dating entirely.

- Brand damage: Public backlash, media stories of scams, loss of trust.

- Regulatory pressure: As scams proliferate, regulators may impose tougher rules, fines, or oversight mandates.

- Network effect collapse: Dating apps rely heavily on active user bases; a drop below critical mass diminishes value for all.

Dating apps are in a race: either lead in authenticity or watch their ecosystems degrade into breeding grounds for deception.